Business Cybersecurity: Key Focus To Protect Your Company

- January 26

- 6 min

The demand for secure AI solutions is growing as artificial intelligence becomes a core component of enterprise operations. While AI systems enhance workflows, customer interactions, and data analysis, they also introduce new vulnerabilities that cybercriminals are eager to exploit.

Incidents like the EchoLeak flaw in Microsoft 365 Copilot highlight the risks of unsecured AI systems. This zero-click vulnerability allowed attackers to embed hidden instructions in emails, enabling unauthorized access to sensitive corporate data without user interaction. It demonstrates why security must be a foundational element when deploying AI-powered solutions.

This incident underscores the urgent need for organizations to design AI systems with security as a foundational principle rather than an afterthought.

As enterprises continue to expand their AI capabilities, they must implement comprehensive security frameworks that protect against both current threats and emerging attack vectors. The stakes are too high to approach AI security with anything less than a proactive, multi-layered strategy that safeguards sensitive data while maintaining the operational benefits that make AI so valuable to modern businesses.

A secure AI solution refers to an artificial intelligence system designed with robust security measures to protect against vulnerabilities, cyber threats, and unauthorized access. It ensures that AI applications operate safely within enterprise environments while safeguarding sensitive data and maintaining system integrity. Key features of a secure AI solution include:

The integration of artificial intelligence into business-critical applications has accelerated dramatically over the past few years. Organizations across industries are leveraging AI to streamline workflow automation, enabling systems to make decisions and take actions with minimal human oversight.

This widespread adoption has changed the enterprise technology landscape. AI systems now serve as intermediaries between users and sensitive data, often with broad access permissions that would have been unthinkable for traditional software applications.

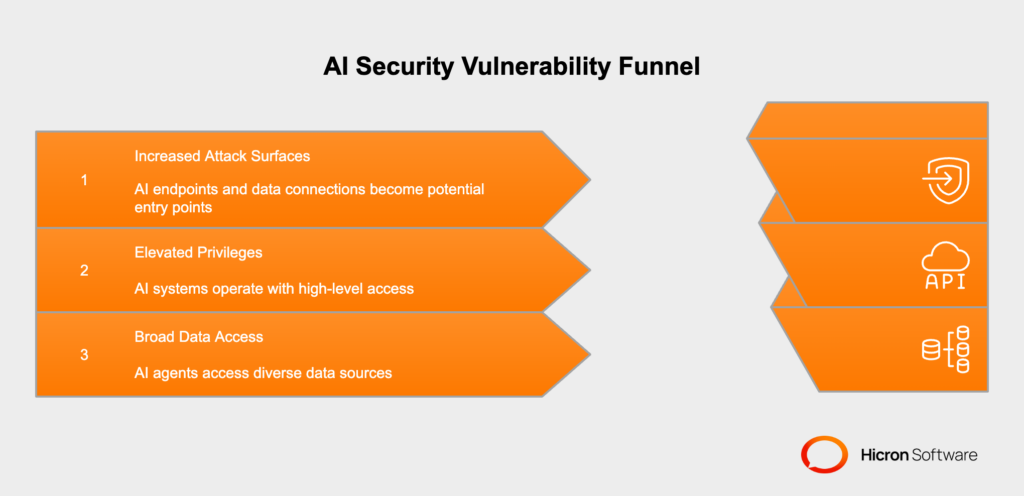

The convenience and efficiency gains are undeniable, but they come with a strong expansion of potential attack surfaces. This increased functionality also increases the need for secure AI solutions to protect sensitive information and prevent exploitative attacks. Every AI endpoint, every data connection, and every automated decision point represents a potential entry point for malicious actors seeking to exploit vulnerabilities.

The challenge is compounded by the fact that AI systems often operate with elevated privileges and access to multiple data sources simultaneously. Unlike traditional applications that might access specific databases or files, modern AI agents can pull information from

all within a single interaction. This broad access, while essential for AI functionality, creates unprecedented opportunities for data breaches if security measures are inadequate.

The EchoLeak vulnerability dramatically underscores the necessity of a secure AI solution. This flaw demonstrated a particularly insidious form of zero-click attack where malicious instructions could be embedded within seemingly legitimate email content. The attack mechanism was deceptively simple yet devastatingly effective.

Attackers could craft emails containing hidden instructions that would be processed by Copilot when the AI system analyzed the email content. These instructions could direct the AI to perform unauthorized actions, such as accessing and exfiltrating data from other emails, spreadsheets, chat conversations, and documents within the organization’s Microsoft 365 environment. The most concerning aspect of this vulnerability was that it required no user interaction whatsoever. The mere presence of the malicious email in the system was sufficient to trigger the unauthorized data access.

The EchoLeak case revealed critical weaknesses in how AI systems handle trust boundaries. The AI agent treated instructions embedded in external content with the same level of trust as legitimate user commands, failing to distinguish between authorized user instructions and potentially malicious instructions from external sources. This breakdown in trust boundary management allowed attackers to essentially hijack the AI’s capabilities and use them for unauthorized purposes.

The implications of EchoLeak extend beyond the immediate data breach potential. The vulnerability highlighted how AI systems can be manipulated to become unwitting accomplices in cyberattacks, using their legitimate access credentials and permissions to perform actions that would be impossible for external attackers to execute directly. This represents a new category of security threat where the AI system itself becomes the attack vector rather than just the target.

The lessons learned from EchoLeak have far-reaching implications that extend well beyond Microsoft’s ecosystem. Similar vulnerabilities likely exist in numerous AI-powered enterprise applications across the industry.

all potentially face similar security challenges.

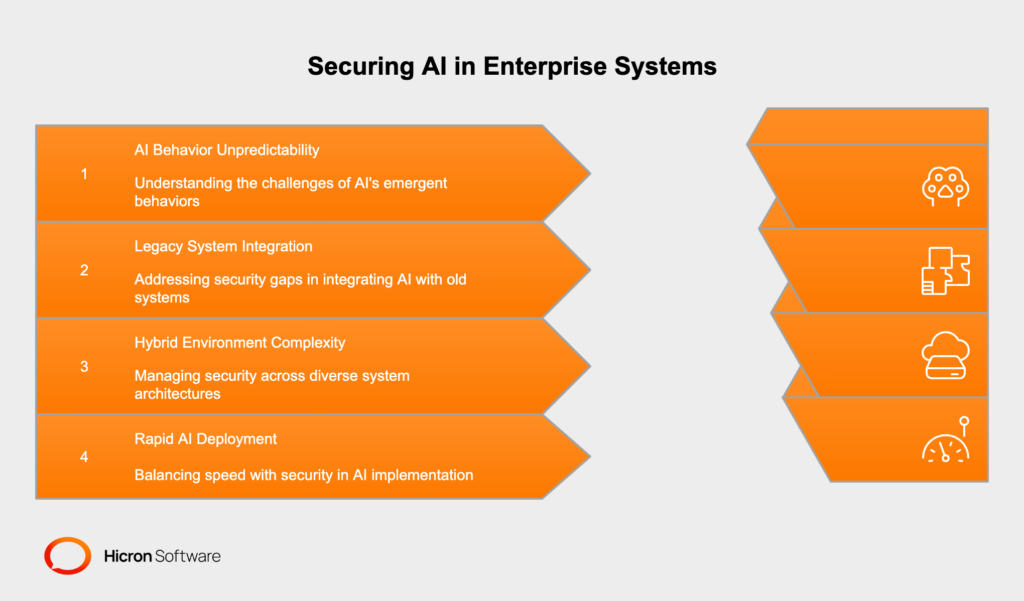

The fundamental issue lies in the unpredictable nature of AI behavior when confronted with adversarial inputs. Traditional software applications follow deterministic logic paths, making it relatively straightforward to predict and secure their behavior. AI systems, particularly those based on large language models, can exhibit emergent behaviors that are difficult to anticipate or control. This unpredictability becomes a vital security concern when AI systems have access to sensitive data and the ability to perform actions on behalf of users.

Integrating secure AI with existing enterprise systems presents additional challenges. Many organizations have legacy systems that were never designed to work with AI agents, creating potential security gaps at integration points. The complexity of modern enterprise environments, with their mix of cloud services, on-premises systems, and hybrid architectures, makes it difficult to maintain consistent security policies across all AI touchpoints.

The industry must also grapple with the speed of AI development and deployment. The competitive pressure to quickly implement AI capabilities often results in security considerations being deprioritized in favor of functionality and time-to-market. This creates a concerning pattern where security vulnerabilities are discovered after systems are already in production and handling sensitive data.

A secure AI solution must include integrations that address these challenges, provide consistent security across hybrid systems, and secure high-risk touchpoints. Organizations should prioritize security even under the pressure to quickly adopt new AI technologies.

Building secure AI systems for enterprise applications requires adherence to fundamental security principles that must be woven into every aspect of system design and operation.

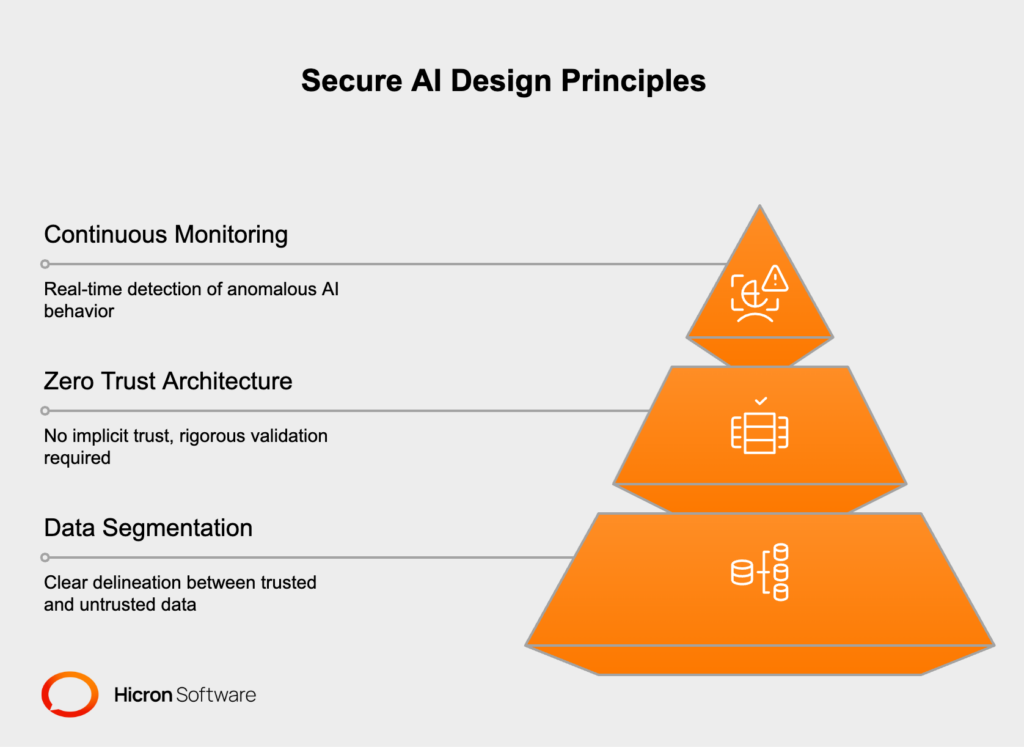

Data segmentation stands as perhaps the most critical principle, requiring clear delineation between trusted and untrusted data sources. AI systems must be designed to recognize and appropriately handle different categories of data based on their source, sensitivity, and trustworthiness. This means implementing strict controls around how external data is processed and ensuring that untrusted inputs cannot influence the AI’s behavior in ways that compromise security.

Zero Trust Architecture represents another cornerstone principle that must be applied rigorously to AI systems. Under this model, no input or request receives implicit trust, regardless of its apparent source or previous interactions. Every instruction, data request, and action must be validated and authorized before execution. For AI systems, this means implementing robust authentication and authorization mechanisms that verify not just user identity but also the legitimacy of the specific actions being requested.

Continuous monitoring forms the third pillar of secure AI design. Given the dynamic and sometimes unpredictable nature of AI behavior, organizations must implement comprehensive monitoring systems that can detect anomalous patterns in real-time. This includes monitoring not just system performance and availability, but also the content and context of AI interactions, the data being accessed, and the actions being performed. Effective monitoring systems must be capable of identifying subtle deviations from expected behavior that might indicate security compromises or attempts at manipulation.

Implementing effective AI security requires a multi-layered approach that addresses threats at multiple points in the system architecture.

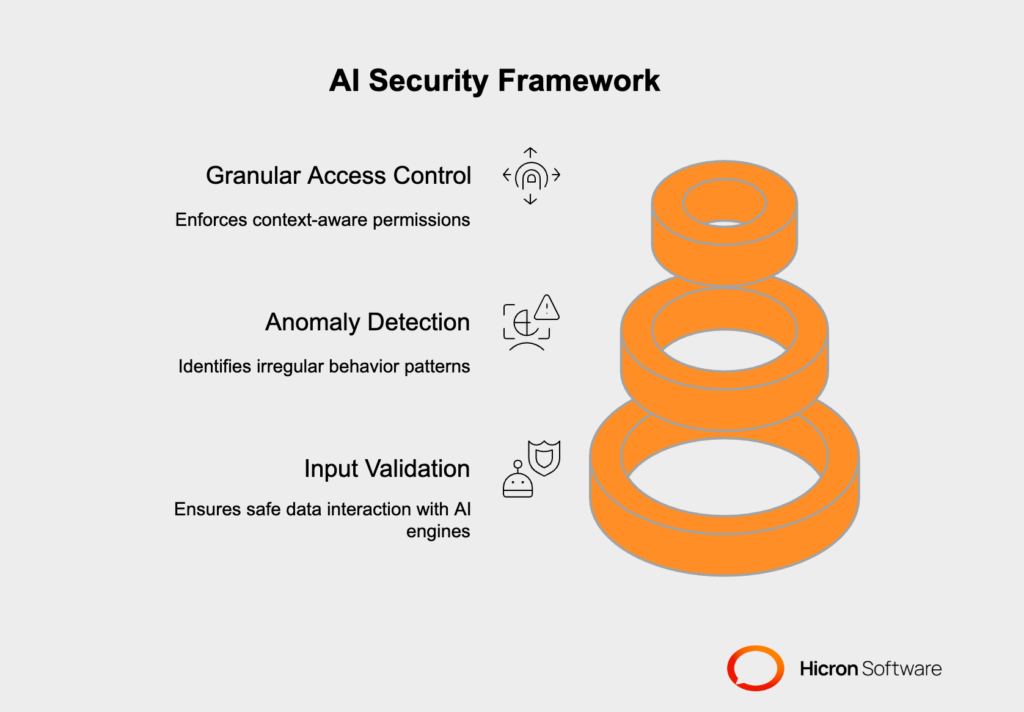

Input validation serves as the first line of defense, requiring sophisticated mechanisms to analyze and sanitize all data before it reaches the AI processing engine. This goes beyond traditional input validation techniques used in conventional applications. AI input validation must be capable of detecting subtle manipulations in natural language text, hidden instructions embedded in various data formats, and adversarial inputs designed to exploit AI-specific vulnerabilities.

Modern input validation for AI systems must employ multiple detection techniques simultaneously. Pattern recognition algorithms can identify suspicious instruction sequences, while semantic analysis can detect attempts to manipulate AI behavior through carefully crafted prompts. Additionally, input validation must be context-aware, understanding not just what data is being provided but also where it originates and what actions it might be attempting to trigger.

Anomaly detection represents the second critical security layer, utilizing machine learning-based threat intelligence to identify unusual AI behavior patterns. These systems must be trained to recognize normal operational patterns for each AI application and flag deviations that might indicate security compromises. Effective anomaly detection for AI systems must account for the legitimate variability in AI responses while identifying truly suspicious activities such as unusual data access patterns, unexpected external communications, or attempts to perform actions outside normal operational parameters.

Access control forms the third essential security layer, implementing granular, role-based permissions that govern both data access and AI operations. This requires moving beyond traditional file-based permissions to implement context-aware access controls that consider not just who is making a request, but what type of request is being made, what data is involved, and what actions are being attempted. AI systems must enforce these access controls consistently across all operations, ensuring that the AI cannot be manipulated into performing actions that exceed the user’s legitimate permissions.

Comprehensive security hardening for AI systems requires a systematic approach that addresses vulnerabilities at multiple levels.

Regular security audits must be conducted with frequencies and methodologies specifically designed for AI systems. These audits should include both automated vulnerability scanning and manual penetration testing that attempts to exploit AI-specific attack vectors. Security audits for AI systems must also include analysis of training data, model architecture, and integration points with other enterprise systems.

The audit process should include red team exercises where security professionals attempt to replicate attacks similar to EchoLeak, testing the system’s resilience against prompt injection, data exfiltration attempts, and other AI-specific attack techniques. These exercises help identify vulnerabilities before they can be exploited by malicious actors and provide valuable insights into how AI systems might be compromised in real-world scenarios.

Adversarial training represents a proactive approach to AI security hardening, involving the deliberate training of AI systems using simulated attacks and malicious inputs. This process helps AI systems learn to recognize and resist various forms of manipulation and exploitation. Adversarial training must be an ongoing process, with training datasets regularly updated to include new attack techniques and evolving threat patterns.

The training process should expose AI systems to a wide range of potential attacks, including prompt injection attempts, social engineering tactics, and data manipulation techniques. By learning to recognize these patterns during training, AI systems become more resilient to similar attacks in production environments. However, adversarial training must be balanced carefully to avoid degrading the AI’s performance on legitimate tasks.

Encryption and secure communication channels provide essential protection for data handled by AI systems. All data processed by AI agents must be encrypted both at rest and in transit, with encryption keys managed through robust key management systems. Communication between AI systems and other enterprise applications must occur through secure, authenticated channels that prevent interception or manipulation of data in transit.

The implementation of secure communication protocols must also address the unique requirements of AI systems, including the need to handle large volumes of data efficiently while maintaining security. This may require specialized encryption techniques optimized for AI workloads and secure protocols that can handle the real-time communication requirements of interactive AI systems.

|

Key Measure |

Description |

Purpose |

Notes |

|

Security Audits |

Regular evaluations using automated vulnerability scanning and manual penetration testing. |

Uncover and address vulnerabilities in AI systems, including training data and integrations. |

Frequency and methodology must be tailored to AI-specific risks and attack vectors. |

|

Red Team Exercises |

Simulated attacks to test system resilience against threats like prompt injection and data theft. |

Identify gaps before exploitation and gain insights into real-world attack scenarios. |

Helps mitigate vulnerabilities similar to the EchoLeak incident. |

|

Adversarial Training |

Training AI models with simulated malicious inputs to recognize and resist manipulation. |

Improve resilience to attacks, including social engineering and data manipulation. |

Requires ongoing updates to training datasets based on new and emerging threats. |

|

Encryption |

Secure data at rest and in transit using robust encryption techniques and key management systems. |

Protect sensitive data from unauthorized access and interception. |

Tailored encryption methods ensure security without hindering system performance. |

|

Secure Communication |

Authenticated channels for communication between AI systems and enterprise applications. |

Prevent interception and manipulation of real-time AI interactions. |

Must address high data volumes and real-time communication needs of interactive AI agents. |

Government and industry bodies are actively shaping regulations for AI deployment. Compliance frameworks tailored to address the unique characteristics of AI will be critical for ensuring the secure implementation of these systems. Organizations should collaborate with regulators and industry groups to create standards that balance innovation with the need for a secure AI solution.

Industry-wide initiatives to create best practices for secure AI deployment are gaining momentum, with various consortia and standards bodies working to establish common AI security frameworks. Organizations can participate in these initiatives to help develop standards that reflect real-world operational requirements while providing robust security protections. These collaborative efforts are noteworthy for establishing interoperability standards that ensure secure AI systems can work effectively across different platforms and vendors.

The development of compliance frameworks specifically designed for AI systems requires careful consideration of the unique characteristics of AI technology. Traditional IT compliance frameworks often fail to address AI-specific risks and requirements, necessitating the development of new approaches that account for the dynamic nature of AI systems, the complexity of their decision-making processes, and the challenges of auditing and monitoring AI behavior.

Strategic partnerships with cybersecurity firms will also enable enterprises to stay ahead of rapidly evolving threats and incorporate cutting-edge solutions.

Strategic partnerships with cybersecurity companies will be essential for organizations looking to stay ahead of evolving AI security threats. Collaborative development of secure AI solutions can also help spread the costs of security research and development across multiple organizations while ensuring that security improvements benefit the entire industry.

The human element remains one of the most critical factors in AI security, making stakeholder education a top priority for organizations implementing AI systems. A successful secure AI solution requires education at all levels.

Developers need to understand not just how to write secure code, but how to design AI systems that are inherently resistant to manipulation and exploitation.

This education should help business leaders understand the potential consequences of security vulnerabilities and the value of investing in comprehensive security measures. Business stakeholders need to appreciate that AI security is not just a technical issue but a business risk that requires appropriate investment and attention.

Promoting secure development lifecycles that emphasize testing and validation of AI systems requires changing organizational culture and processes. This includes implementing security checkpoints throughout the AI development process, requiring security reviews before AI systems are deployed to production, and establishing ongoing monitoring and assessment procedures.

The secure development lifecycle for AI systems must account for the iterative nature of AI development and the need for continuous testing and validation as AI models are updated and refined.

Creating a secure AI solution demands proactive measures to address vulnerabilities, especially in the face of incidents like EchoLeak. AI security requires a multi-layered framework that includes input validation, anomaly detection, and access control. Organizations must shift their mindset to treat AI security as an essential operational requirement.

To implement a secure AI solution, organizations should:

By prioritizing proactive security measures today, enterprises can unlock AI’s full potential without exposing themselves to unnecessary risks. A secure AI solution is the foundation for safe, efficient, and innovative enterprise operations in the AI-driven future.

AI systems face several unique security challenges, including adversarial attacks, exploitation of input validation gaps, and manipulation through malicious prompts. Additionally, their broad access to sensitive data and ability to perform privileged actions make them susceptible to becoming tools for unauthorized use. The unpredictable nature of AI behavior, especially in response to adversarial inputs, compounds these challenges.

A secure AI solution incorporates key features such as data protection (e.g., encryption), zero trust architecture, input validation to filter malicious data, anomaly detection for real-time threat response, granular access control based on roles and permissions, and adversarial resilience to withstand manipulation attempts. These features ensure robust protection against a wide range of potential vulnerabilities.

EchoLeak revealed how AI systems could process hidden commands embedded in seemingly legitimate inputs, leading to unauthorized access without user interaction. It underscored the critical need to establish robust trust boundaries, input validation, and systemic defenses in AI systems to prevent attackers from exploiting their vulnerabilities.

Organizations and stakeholders play crucial roles in implementing robust AI security. Developers must design systems resistant to manipulation, incorporating security best practices. Business leaders need to treat AI security as a strategic imperative, understanding its business risks and prioritizing education and investment in comprehensive security measures across all levels of an organization.

Proactive measures include conducting regular security audits and red team exercises, using adversarial training to expose systems to simulated attacks, encrypting sensitive data at rest and in transit, and employing secure, authenticated communication protocols. Continuous monitoring and updating security frameworks are also essential to address the dynamic and evolving nature of AI threats.

Securing an AI system involves implementing a multi-layered security approach. Key steps include strong input validation to filter malicious data, anomaly detection to identify unusual behavior, and granular access control to restrict system actions. Additionally, integrating Zero Trust Architecture ensures that no input or request is granted implicit trust. Regular security audits, application of encryption protocols, and continuous monitoring further enhance protection.

A secure AI application could be an AI-powered fraud detection system used by banks. These systems analyze transaction patterns to flag suspicious activity while operating within strict security constraints. For instance, they use encryption to protect sensitive financial data and robust access controls to limit the system’s actions to authorized personnel, ensuring security and trust in its operations.

Making AI safe requires a combination of technical safeguards and human oversight. Start by designing systems that incorporate security into their architecture, such as data segmentation and real-time monitoring. Train AI models with adversarial datasets to prepare them for potential threats. Additionally, educate stakeholders—including developers and business leaders—to implement best practices and address AI-specific vulnerabilities proactively.