Azure DevOps Scalable Build Environment with AKS

- April 17

- 24 min

Managing traffic efficiently is a fundamental challenge in modern cloud architecture, especially as organizations increasingly adopt public and hybrid cloud solutions. This is where Kubernetes Ingress comes into play—it’s a powerful tool that helps streamline and control incoming traffic to your Kubernetes services. For DevOps teams, Kubernetes Ingress is not just a convenience; it’s an essential component for achieving scalability, reliability, and seamless deployment workflows.

This article explores how Kubernetes Ingress fits into DevOps practices and offers practical insights into utilizing it effectively in public and hybrid cloud environments. If you want to enhance security with a tailored approach, we’ve prepared a comprehensive guide focused on securing Kubernetes Ingress to meet your organization’s unique needs. Get ready to unlock the full potential of your DevOps and cloud strategies.

Problem/challenge

The client’s infrastructure is hosted on Microsoft Azure, and access to critical services is tightly controlled for security reasons. Within the client’s office, there is a well-defined range of outgoing public IP addresses. These IPs are whitelisted, ensuring that services are only accessible from within the office network.

However, a significant challenge arises with remote work, which is becoming increasingly prevalent. Employees working remotely do not share the same public IPs as the office. Public IP addresses for remote workers are often assigned dynamically by their Internet Service Providers (ISPs), changing frequently and unpredictably. This makes it nearly impossible to maintain a reliable and up-to-date whitelist for remote users. Additionally, relying solely on IP-based whitelisting presents critical drawbacks:

To further complicate matters, the client cannot establish a VPN connection to peer their office network with the Azure infrastructure. While VPNs could provide a stable IP address for remote employees, this limitation—due to unknown reasons—leaves the organization without a straightforward alternative for secure remote access.

This challenge is particularly problematic for long-term contracts and collaborative projects involving remote employees. Without a scalable and secure solution, maintaining efficient and secure workflows becomes increasingly difficult. Employees frequently encounter disruptions, leading to delays, frustration, and a potential loss of trust in the reliability of the infrastructure.

Other potential workarounds, such as using HTTP proxies, are also infeasible due to protocol limitations. Many critical services require UDP or pure TCP access, which most HTTP proxies cannot handle effectively.

Solution Requirements

To address these challenges, the solution must:

What We Know So Far

The client’s main requirement is to authorize users based on known IP addresses, specifically from the office network. This approach works well within the office environment and should be extendable for future needs. However, remote workers present a challenge because their IP addresses are not predictable. Therefore, an alternative method of authorization is necessary to accommodate remote users.

The client’s infrastructure runs on Azure Kubernetes Service (AKS). This provides opportunities to use Kubernetes-native solutions and leverage existing tools such as Nginx Ingress to manage access to services. Additionally, secure communication is already established through the use of TLS, a robust protocol for encrypted connections.

A Theoretical Solution

One promising approach to solving this problem is mutual TLS (mTLS). Unlike standard TLS, where only the client validates the server’s certificate, mTLS allows both the client and the server to authenticate each other. This ensures that only authorized users can access services, regardless of their IP address. Here’s how this could address the client’s needs:

However, implementing mTLS introduces several considerations:

Challenges and Next Steps

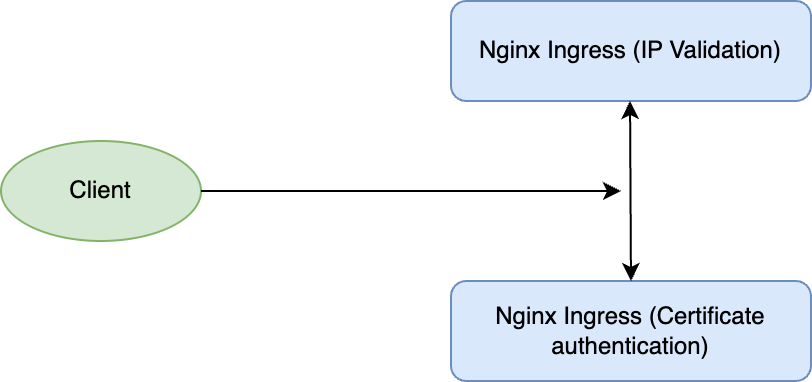

One key challenge is integrating these two mechanisms—IP-based whitelisting and mTLS—into a unified Ingress configuration. The Ingress must handle both types of authorization without introducing undue complexity or overhead.

By addressing these theoretical considerations, we can devise a solution that meets the client’s security, scalability, and flexibility requirements. Here is the explanation of what we want to achieve using one tool:

Phase 1: Test Infrastructure Preparation

The first phase involved creating the test infrastructure using Pulumi to automate the deployment.

Pulumi was chosen to define and deploy the test infrastructure for this solution, leveraging its modern approach to infrastructure-as-code and its ability to integrate seamlessly with programming workflows. Using Pulumi instead of the more commonly used Terraform highlights a fresh and innovative perspective, aligning the solution with emerging trends and showing flexibility in adopting new tools.

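The infrastructure was set up entirely on Azure and, as the Pulumi preview later in this phase shows, consisted of:

- a resource group (test)

- an AKS cluster (testAKS)

- two virtual machines (testvm1 and testvm2), each with its own virtual network, network security group, network interface, public IP address, and generated SSH key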

This infrastructure served as the foundation for testing the solution. The Git repository will be shared as a source at the end of the article.

Let’s authorize the Azure tenant and then we can proceed with Pulumi.

The first operation with Pulumi is logging in to the state backend, which in our case is the local filesystem:

poc: export PULUMI_CONFIG_PASSPHRASE=""

poc: pulumi login --local

Logged in to vm.local as user (file://~)

Now we can select our setup file (stack):

poc: pulumi stack select poc --cwd infrastructure

Let’s deploy our infrastructure.

poc: export ARM_SUBSCRIPTION_ID=xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx

poc: pulumi up --cwd infrastructure

Previewing update (poc):

Type Name Plan

+ pulumi:pulumi:Stack ingress-nginx-public-restrictions-poc create

+ ├─ tls:index:PrivateKey testvm1-ssh-key create

+ ├─ azure:core:ResourceGroup test create

+ ├─ random:index:RandomString testvm1-domain-label create

+ ├─ tls:index:PrivateKey testvm2-ssh-key create

+ ├─ random:index:RandomString testvm2-domain-label create

+ ├─ azure:containerservice:KubernetesCluster testAKS create

+ ├─ azure-native:network:NetworkSecurityGroup testvm2-security-group create

+ ├─ azure-native:network:NetworkSecurityGroup testvm1-security-group create

+ ├─ azure-native:network:VirtualNetwork testvm1-network create

+ ├─ azure-native:network:VirtualNetwork testvm2-network create

+ ├─ azure-native:network:PublicIPAddress testvm1-public-ip create

+ ├─ azure-native:network:PublicIPAddress testvm2-public-ip create

+ ├─ azure-native:network:NetworkInterface testvm1-security-group create

+ ├─ azure-native:network:NetworkInterface testvm2-security-group create

+ ├─ azure-native:compute:VirtualMachine testvm1 create

+ └─ azure-native:compute:VirtualMachine testvm2 create

Outputs:

kubeConfig : output<string>

vm1-ip : output<string>

vm1-privatekey: output<string>

vm2-ip : output<string>

vm2-privatekey: output<string>

Resources:

+ 17 to create

Do you want to perform this update? yes

Updating (poc):

Type Name Status

+ pulumi:pulumi:Stack ingress-nginx-public-restrictions-poc created (330s)

+ ├─ tls:index:PrivateKey testvm1-ssh-key created (0.36s)

+ ├─ random:index:RandomString testvm1-domain-label created (0.01s)

+ ├─ random:index:RandomString testvm2-domain-label created (0.02s)

+ ├─ azure:core:ResourceGroup test created (10s)

+ ├─ tls:index:PrivateKey testvm2-ssh-key created (1s)

+ ├─ azure:containerservice:KubernetesCluster testAKS created (315s)

+ ├─ azure-native:network:PublicIPAddress testvm2-public-ip created (4s)

+ ├─ azure-native:network:NetworkSecurityGroup testvm1-security-group created (2s)

+ ├─ azure-native:network:VirtualNetwork testvm2-network created (4s)

+ ├─ azure-native:network:VirtualNetwork testvm1-network created (4s)

+ ├─ azure-native:network:PublicIPAddress testvm1-public-ip created (4s)

+ ├─ azure-native:network:NetworkSecurityGroup testvm2-security-group created (2s)

+ ├─ azure-native:network:NetworkInterface testvm2-security-group created (1s)

+ ├─ azure-native:network:NetworkInterface testvm1-security-group created (1s)

+ ├─ azure-native:compute:VirtualMachine testvm2 created (48s)

+ └─ azure-native:compute:VirtualMachine testvm1 created (48s)

Outputs:

kubeConfig : [secret]

vm1-ip : "20.71.218.174"

vm1-privatekey: [secret]

vm2-ip : "20.71.218.175"

vm2-privatekey: [secret]

Resources:

+ 17 created

Duration: 5m37s

Check the access to the Kubernetes API and the two VMs.

poc: pulumi stack output kubeConfig --show-secrets --cwd infrastructure > kubeconfig

poc: kubectl --kubeconfig=kubeconfig get no

NAME STATUS ROLES AGE VERSION

aks-default-29200747-vmss000000 Ready <none> 130m v1.30.6

poc: pulumi stack output vm1-privatekey --cwd infrastructure --show-secrets > vm1.rsa

poc: chmod 600 vm1.rsa

poc: ssh -i vm1.rsa pulumiuser@$(pulumi stack output vm1-ip --cwd infrastructure)

Linux testvm1 5.10.0-33-cloud-amd64 #1 SMP Debian 5.10.226-1 (2024-10-03) x86_64

The programs included with the Debian GNU/Linux system are free software;

the exact distribution terms for each program are described in the

individual files in /usr/share/doc/*/copyright.

Debian GNU/Linux comes with ABSOLUTELY NO WARRANTY, to the extent

permitted by applicable law.

pulumiuser@testvm1:~$ exit

logout

Connection to 20.71.218.174 closed.

poc: pulumi stack output vm2-privatekey --cwd infrastructure --show-secrets > vm2.rsa

poc: chmod 600 vm2.rsa

poc: ssh -i vm2.rsa pulumiuser@$(pulumi stack output vm2-ip --cwd infrastructure)

Linux testvm2 5.10.0-33-cloud-amd64 #1 SMP Debian 5.10.226-1 (2024-10-03) x86_64

pulumiuser@testvm2:~$ exit

logout

Connection to 20.71.218.175 closed.

The test infrastructure is fully set up and operational, ready for validating all scenarios.

Phase 2: Deployment of Nginx Ingress Controller

With the infrastructure ready, the next step was to deploy a pure Nginx Ingress controller onto the AKS cluster. This deployment used a default configuration to establish a baseline for further customization.

We used the official ingress-nginx Helm chart to quickly deploy a stable Ingress Nginx version.

Helm is a package manager for Kubernetes that simplifies the deployment and management of applications by using reusable, configurable charts. It streamlines the setup process, making it easier to define, install, and upgrade complex Kubernetes resources.

The first step is a default deployment with one replica.

The values file:

ingress-nginx:
  controller:
    service:
      enabled: true
      type: LoadBalancer
      externalTrafficPolicy: Local
    replicaCount: 1

Deployment of Ingress Nginx:

poc: helm dependency build ./ingress-nginx

Getting updates for unmanaged Helm repositories...

...Successfully got an update from the "https://kubernetes.github.io/ingress-nginx" chart repository

Saving 1 charts

Downloading ingress-nginx from repo https://kubernetes.github.io/ingress-nginx

Deleting outdated charts

poc: helm upgrade --kubeconfig=kubeconfig --install --namespace ingress-nginx --create-namespace ingress ./ingress-nginx

Release "ingress" does not exist. Installing it now.

NAME: ingress

LAST DEPLOYED: Tue Jan 7 15:27:50 2025

NAMESPACE: ingress-nginx

STATUS: deployed

REVISION: 1

TEST SUITE: None

Now we can get the external IP that Azure assigned to the Ingress service.

poc: kubectl --kubeconfig=kubeconfig -n ingress-nginx get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-ingress-nginx-controller LoadBalancer 10.0.242.152 51.138.4.141 80:32102/TCP,443:32518/TCP 75s

ingress-ingress-nginx-controller-admission ClusterIP 10.0.57.81 <none> 443/TCP 75s

Quick check that the service is accessible:

poc: curl http://51.138.4.141

<html>

<head><title>404 Not Found</title></head>

<body>

<center><h1>404 Not Found</h1></center>

<hr><center>nginx</center>

</body>

</html>

Have you heard about https://nip.io/? This is a great solution for a PoC: it lets us assign hosts in Ingress without changing anything in any domain:

poc: curl -k https://51.138.4.141.nip.io

<html>

<head><title>404 Not Found</title></head>

<body>

<center><h1>404 Not Found</h1></center>

<hr><center>nginx</center>

</body>

</html>

Thanks to this solution, we can deploy and test an example application behind Ingress. The Podinfo app will be sufficient.
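To make the nip.io convention concrete, here is a minimal Python sketch of the mapping between an IP address and its wildcard hostname. It only models the naming scheme; the helper names `nip_io_host` and `embedded_ip` are ours for illustration and are not part of any nip.io tooling.

```python
import ipaddress


def nip_io_host(ip: str) -> str:
    """Build the wildcard-DNS hostname that nip.io resolves back to `ip`."""
    ipaddress.IPv4Address(ip)  # raises ValueError for anything that is not IPv4
    return f"{ip}.nip.io"


def embedded_ip(hostname: str) -> str:
    """Recover the IPv4 address embedded in a dotted nip.io hostname."""
    suffix = ".nip.io"
    if not hostname.endswith(suffix):
        raise ValueError(f"not a nip.io hostname: {hostname}")
    labels = hostname[: -len(suffix)].split(".")
    candidate = ".".join(labels[-4:])  # the last four labels carry the address
    return str(ipaddress.IPv4Address(candidate))


print(nip_io_host("51.138.4.141"))
print(embedded_ip("app.51.138.4.141.nip.io"))
```

Because the address is part of the name, the Ingress host has to be updated whenever the load balancer IP changes, which is acceptable for a PoC but not for a long-lived environment.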

The Chart.yaml change:

dependencies:
  - name: ingress-nginx
    version: 4.11.3
    repository: https://kubernetes.github.io/ingress-nginx
  - name: podinfo
    version: 6.7.1
    repository: https://stefanprodan.github.io/podinfo

The values.yaml changes that enable Ingress for this app so we can test it:

ingress-nginx:
  controller:
    service:
      enabled: true
      type: LoadBalancer
      externalTrafficPolicy: Local
    replicaCount: 1
podinfo:
  ui:
    message: "It works as expected!"
  ingress:
    enabled: true
    className: "nginx"
    hosts:
      - host: 51.138.4.141.nip.io
        paths:
          - path: /
            pathType: ImplementationSpecific

Deployment:

poc: helm dependency update ./ingress-nginx

Getting updates for unmanaged Helm repositories...

...Successfully got an update from the "https://stefanprodan.github.io/podinfo" chart repository

...Successfully got an update from the "https://kubernetes.github.io/ingress-nginx" chart repository

Saving 2 charts

Downloading ingress-nginx from repo https://kubernetes.github.io/ingress-nginx

Downloading podinfo from repo https://stefanprodan.github.io/podinfo

Deleting outdated charts

poc: helm upgrade --kubeconfig=kubeconfig --install --namespace ingress-nginx --create-namespace ingress ./ingress-nginx

Release "ingress" has been upgraded. Happy Helming!

NAME: ingress

LAST DEPLOYED: Wed Jan 8 12:56:32 2025

NAMESPACE: ingress-nginx

STATUS: deployed

REVISION: 2

poc: curl -k https://51.138.4.141.nip.io

{

"hostname": "ingress-podinfo-59788c475f-bwdmj",

"version": "6.7.1",

"revision": "6b7aab8a10d6ee8b895b0a5048f4ab0966ed29ff",

"color": "#34577c",

"logo": "https://raw.githubusercontent.com/stefanprodan/podinfo/gh-pages/cuddle_clap.gif",

"message": "It works as expected!",

"goos": "linux",

"goarch": "amd64",

"runtime": "go1.23.2",

"num_goroutine": "8",

"num_cpu": "2"

}

The initial configuration has been completed. Ingress Nginx and Podinfo (our example application) are ready. Now it’s time to test the options we have.

Phase 3: Configure Whitelisting and Test

In this phase, the IP whitelisting feature of the Nginx Ingress controller was configured. The public IP address of one of the two VMs (VM1) was added to the whitelist to simulate a controlled office network environment, while VM2 stayed outside the whitelist.

The testing process included:

This structured approach ensured that each solution component was thoroughly tested before progressing to further enhancements, such as integrating client certificate validation with mTLS.

Whitelisting in Ingress Nginx is easy to achieve because it is a built-in feature enabled by an annotation.

We need to add one annotation with the CIDR to be whitelisted. It is officially described here: https://github.com/kubernetes/ingress-nginx/blob/ingress-nginx-3.15.2/docs/user-guide/nginx-configuration/annotations.md#whitelist-source-range

We can whitelist our virtual machine on Azure called VM1.

poc: pulumi stack output vm1-ip --cwd infrastructure

20.71.218.174

The change in the values.yaml file for the Helm deployment:

podinfo:
  ui:
    message: "It works as expected!"
  ingress:
    enabled: true
    className: "nginx"
    annotations:
      nginx.ingress.kubernetes.io/whitelist-source-range: "20.71.218.174/32"
    hosts:
      - host: 51.138.4.141.nip.io
        paths:
          - path: /
            pathType: ImplementationSpecific

poc: helm upgrade --kubeconfig=kubeconfig --install --namespace ingress-nginx --create-namespace ingress ./ingress-nginx

Release "ingress" has been upgraded. Happy Helming!

NAME: ingress

LAST DEPLOYED: Wed Jan 8 20:55:21 2025

NAMESPACE: ingress-nginx

STATUS: deployed

REVISION: 3

A quick test from my machine:

poc: curl -k https://51.138.4.141.nip.io

<html>

<head><title>403 Forbidden</title></head>

<body>

<center><h1>403 Forbidden</h1></center>

<hr><center>nginx</center>

</body>

</html>

Do the same from VM1:

poc: ssh -i vm1.rsa pulumiuser@$(pulumi stack output vm1-ip --cwd infrastructure)

Linux testvm1 5.10.0-33-cloud-amd64 #1 SMP Debian 5.10.226-1 (2024-10-03) x86_64

pulumiuser@testvm1:~$ curl -k https://51.138.4.141.nip.io

{

"hostname": "ingress-podinfo-59788c475f-bwdmj",

"version": "6.7.1",

"revision": "6b7aab8a10d6ee8b895b0a5048f4ab0966ed29ff",

"color": "#34577c",

"logo": "https://raw.githubusercontent.com/stefanprodan/podinfo/gh-pages/cuddle_clap.gif",

"message": "It works as expected!",

"goos": "linux",

"goarch": "amd64",

"runtime": "go1.23.2",

"num_goroutine": "8",

"num_cpu": "2"

}

We have a confirmation that the whitelisting works as expected!
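The check behind the whitelist annotation can be approximated in a few lines of Python. This is a sketch of the matching semantics only (a comma-separated list of CIDRs tested against the client address), not the controller's implementation; `parse_source_ranges` and `is_whitelisted` are hypothetical helper names.

```python
import ipaddress


def parse_source_ranges(annotation_value: str):
    """Parse a comma-separated list of CIDRs, the format the annotation takes."""
    return [
        ipaddress.ip_network(item.strip(), strict=False)
        for item in annotation_value.split(",")
        if item.strip()
    ]


def is_whitelisted(client_ip: str, annotation_value: str) -> bool:
    """Return True when client_ip falls inside any of the listed networks."""
    address = ipaddress.ip_address(client_ip)
    return any(address in network for network in parse_source_ranges(annotation_value))


# VM1 is inside the /32 range, VM2 is not.
print(is_whitelisted("20.71.218.174", "20.71.218.174/32"))
print(is_whitelisted("20.71.218.175", "20.71.218.174/32"))
```

A /32 range matches exactly one address, which is why the request from the local machine was rejected while the one from VM1 passed.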

Phase 4: Integration of mTLS Authentication

In this phase, we modified the authentication strategy to incorporate mutual TLS (mTLS) for enhanced security. The goal was to ensure that remote workers could authenticate securely without relying on dynamic IP whitelisting.

Key Changes:

To configure mutual TLS (mTLS) for the ingress, it was necessary to generate both a Certificate Authority (CA) certificate and client certificates using OpenSSL. The CA certificate establishes trust by signing client certificates, while the client certificates authenticate users, ensuring secure and authorized access to services.
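As a rough analogy outside of Nginx, the same client-certificate policy can be expressed with Python's standard `ssl` module. The sketch below is illustrative only: the function name `server_context` and the mode strings are our own, chosen to mirror the "on" and "optional" values of the Ingress annotation, and a real server would additionally load its own certificate and key.

```python
import ssl
from typing import Optional


def server_context(client_ca_file: Optional[str], mode: str) -> ssl.SSLContext:
    """Build a server-side TLS context with the requested client-certificate policy.

    mode: "off" (standard TLS), "optional", or "on" (mutual TLS).
    """
    policies = {
        "off": ssl.CERT_NONE,
        "optional": ssl.CERT_OPTIONAL,
        "on": ssl.CERT_REQUIRED,
    }
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.verify_mode = policies[mode]
    if client_ca_file is not None:
        # Client certificates are validated against this CA (ca.crt in this article).
        context.load_verify_locations(cafile=client_ca_file)
    return context


# With "on", the handshake fails unless the client presents a certificate signed by the CA.
print(server_context(None, "on").verify_mode)
```

The CA file plays the same role as the mtls-ca-cert secret: it is the trust anchor against which presented client certificates are checked.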

Ingress Nginx also supports mTLS through annotations; let’s quickly configure and test it.

First, generate the CA certificate and store it as a Secret manifest in the chart’s templates folder:

poc: openssl genrsa -out ca.key 4096

poc: openssl req -x509 -new -nodes -key ca.key -sha256 -days 3650 -out ca.crt

You are about to be asked to enter information that will be incorporated

into your certificate request.

What you are about to enter is what is called a Distinguished Name or a DN.

There are quite a few fields but you can leave some blank

For some fields there will be a default value,

If you enter '.', the field will be left blank.

-----

Country Name (2 letter code) [AU]:

State or Province Name (full name) [Some-State]:

Locality Name (eg, city) []:

Organization Name (eg, company) [Internet Widgits Pty Ltd]:

Organizational Unit Name (eg, section) []:

Common Name (e.g. server FQDN or YOUR name) []:

Email Address []:

poc: kubectl create secret generic mtls-ca-cert --from-file ca.crt --dry-run=client -o yaml > ingress-nginx/templates/mtls-ca-cert-secret.yaml

Changes in the values.yaml file:

podinfo:
  ui:
    message: "It works as expected!"
  ingress:
    enabled: true
    className: "nginx"
    annotations:
      nginx.ingress.kubernetes.io/auth-tls-verify-client: "on"
      nginx.ingress.kubernetes.io/auth-tls-secret: "ingress-nginx/mtls-ca-cert"
    hosts:
      - host: 51.138.4.141.nip.io
        paths:
          - path: /
            pathType: ImplementationSpecific

Update the application and do some tests.

poc: helm upgrade --kubeconfig=kubeconfig --install --namespace ingress-nginx --create-namespace ingress ./ingress-nginx

Release "ingress" has been upgraded. Happy Helming!

NAME: ingress

LAST DEPLOYED: Wed Jan 8 21:12:38 2025

NAMESPACE: ingress-nginx

STATUS: deployed

REVISION: 4

poc: curl -k https://51.138.4.141.nip.io

<html>

<head><title>400 No required SSL certificate was sent</title></head>

<body>

<center><h1>400 Bad Request</h1></center>

<center>No required SSL certificate was sent</center>

<hr><center>nginx</center>

</body>

</html>

Client certificate generation:

poc: openssl genpkey -algorithm RSA -out client.key -pkeyopt rsa_keygen_bits:2048

..+.+.....+................+........+.+..+...................+++++++++++++++++++++++++++++++++++++++*....+.......+...+..+++++++++++++++++++++++++++++++++++++++*...+.....+...+.+.........+...+........+.+.....+.+.....+...+.........+.+......+..............+.......+.....+..........+..+......+.........+...+...+.........+...+.......+...+...............+...+.........+......+..+.............+.....+.+.....+....+...........+.+..+.+.....+.+......+........+......+.+...+.........+...+...+......+...........+............+...+...+....+...+........+.+......+.....+....+...+.....+...+....+.........+.....+....+.....+..........+..+....+.....+.........+...+..........+..+.......+.....+.........++++++

......+..+.......+..+.+..+...............+..........+...+............+...........+.+.........+..+...+......+...+++++++++++++++++++++++++++++++++++++++*.+.....+++++++++++++++++++++++++++++++++++++++*...+.......+...+.....+..................+.......+..+......+...+.+...........+.............+......+...........+....+...+.....+.+..+...+......+.+..+......+..........+..+...+.+...+.....+...+...+......+.+............+...+..+............+..........+...+.....+.+..............+.......+......+..+.+..+....+...+.....+...+...+..........+.....+..........+.........+..+..........+......+...+..+...................+....................+......+.........+....+..+.............+..+.........+.........+.+...........+...+.+..............................+......+............+......+.........+..++++++

poc: openssl req -new -key client.key -out client.csr -subj "/C=US/ST=California/L=SanFrancisco/O=MyOrganization/OU=MyOrgUnit/CN=client.example.com"

poc: openssl x509 -req -in client.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out client.crt -days 365

Certificate request self-signature ok

subject=C=US, ST=California, L=SanFrancisco, O=MyOrganization, OU=MyOrgUnit, CN=client.example.com

Retry the test:

poc: curl -k --cert client.crt --key client.key --cacert ca.crt https://51.138.4.141.nip.io

{

"hostname": "ingress-podinfo-59788c475f-bwdmj",

"version": "6.7.1",

"revision": "6b7aab8a10d6ee8b895b0a5048f4ab0966ed29ff",

"color": "#34577c",

"logo": "https://raw.githubusercontent.com/stefanprodan/podinfo/gh-pages/cuddle_clap.gif",

"message": "It works as expected!",

"goos": "linux",

"goarch": "amd64",

"runtime": "go1.23.2",

"num_goroutine": "8",

"num_cpu": "2"

}

Challenge Encountered: While configuring mTLS authentication, we discovered an important limitation: we found no built-in way to let a single request pass when either the IP whitelist or the mTLS check succeeds. The Nginx Ingress controller annotations do not natively support combining both mechanisms as alternatives for one request.

To overcome this limitation, we had to consider a workaround allowing both features to coexist.

Phase 5: Implementing Lua Script for Dual Authentication

NOTE: Hicron Software is not responsible for code snippets presented in the article and those should be used at own risk.

Given the limitations of the Nginx Ingress controller, we explored using Lua scripts to create a custom solution. Lua scripts allow for fine-grained control over request handling, enabling the combination of both IP-based whitelisting and mTLS authentication.

We implemented a Lua script within the Nginx configuration that performed the following:

If either condition (a whitelisted IP or a valid client certificate) was satisfied, the request would be allowed to proceed. We also need to cover the offboarding of employees or clients who hold a client certificate. Fingerprint validation is the solution we are looking for: a certificate is accepted only while its fingerprint stays on an allowed list.
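To illustrate what fingerprint validation compares, here is a small Python sketch that derives a SHA-1 fingerprint from a PEM certificate and normalizes list entries before matching. It assumes the fingerprint is the SHA-1 digest of the DER-encoded certificate in lowercase hex (our understanding of what Nginx exposes); the helper names are hypothetical.

```python
import base64
import hashlib
import re

PEM_BODY = re.compile(
    r"-----BEGIN CERTIFICATE-----(.*?)-----END CERTIFICATE-----", re.DOTALL
)


def sha1_fingerprint(pem_text: str) -> str:
    """Return the lowercase hex SHA-1 digest of the first certificate in pem_text."""
    match = PEM_BODY.search(pem_text)
    if match is None:
        raise ValueError("no PEM certificate found")
    der = base64.b64decode("".join(match.group(1).split()))
    return hashlib.sha1(der).hexdigest()


def normalize_fingerprint(value: str) -> str:
    """Strip colons and whitespace and lowercase, so list entries compare reliably."""
    return re.sub(r"[:\s]", "", value).lower()


def is_allowed(fingerprint: str, allowed_entries) -> bool:
    """Check a fingerprint against the allowed list, ignoring case and separators."""
    wanted = normalize_fingerprint(fingerprint)
    return any(normalize_fingerprint(entry) == wanted for entry in allowed_entries)
```

Offboarding then amounts to removing one line from the allowed list: the certificate remains cryptographically valid, but it no longer grants access.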

To add the Lua script, we need to enable snippet annotations in our Ingress Nginx deployment through the values.yaml file:

ingress-nginx:
  controller:
    service:
      enabled: true
      type: LoadBalancer
      externalTrafficPolicy: Local
    replicaCount: 1
    allowSnippetAnnotations: true

The Lua script covers:

Fingerprint generation:

poc: openssl x509 -in client.crt -noout -fingerprint -sha1 | awk -F= '{print $2}' | sed 's/://g'

C143CF80EA70635C0ABACA634F0D06837DC31004

Here’s a sample configuration for integrating both IP whitelisting and mTLS authentication using Lua:

podinfo:
  ui:
    message: "It works as expected!"
  ingress:
    enabled: true
    className: "nginx"
    annotations:
      nginx.ingress.kubernetes.io/auth-tls-verify-client: "optional"
      nginx.ingress.kubernetes.io/auth-tls-secret: "ingress-nginx/mtls-ca-cert"
      nginx.ingress.kubernetes.io/configuration-snippet: |
        # Load the IP whitelist from the mounted ConfigMap file
        set_by_lua_block $ip_whitelist {
          local function escape_pattern(s)
            return (s:gsub("([^%w])", "\\%1")) -- Escape special characters with backslash
          end
          local whitelist = ""
          local f = io.open("/etc/nginx/configmaps/whitelist-ips", "r")
          if f then
            for line in f:lines() do
              local escaped_line = escape_pattern(line:match("^%s*(.-)%s*$")) -- Trim and escape
              if whitelist == "" then
                whitelist = escaped_line
              else
                whitelist = whitelist .. "|" .. escaped_line
              end
            end
            f:close()
          end
          -- Log the resolved whitelist
          ngx.log(ngx.ERR, "Resolved IP Whitelist: ", whitelist)
          return whitelist
        }
        # Allow access if the IP is whitelisted or if the client certificate is valid
        set $allow_access 0;
        set_by_lua_block $ip_valid {
          local client_ip = ngx.var.remote_addr
          local whitelist_regex = ngx.var.ip_whitelist
          -- Perform regex match
          local matched = ngx.re.match(client_ip, whitelist_regex)
          if not matched then
            return 0
          end
          return 1
        }
        # Check the client certificate in Lua and validate
        set_by_lua_block $client_cert_valid {
          local client_cert = ngx.var.ssl_client_cert
          local client_verify = ngx.var.ssl_client_verify
          ngx.log(ngx.ERR, "Debug: Client Cert: ", client_cert)
          ngx.log(ngx.ERR, "Debug: Client Cert Verify Status: ", client_verify)
          local client_cert_fingerprint = ngx.var.ssl_client_fingerprint
          ngx.log(ngx.ERR, "Debug: Client Cert Fingerprint: ", ngx.var.ssl_client_fingerprint)
          -- Check if the client certificate fingerprint is in the allowed list
          local matched_cert = false
          local f = io.open("/etc/nginx/configmaps/allowed-cert-fingerprints", "r")
          if f then -- Guard against a missing file instead of raising an error
            for fingerprint in f:lines() do
              ngx.log(ngx.ERR, "Debug: fingerprint on list: ", fingerprint)
              if client_cert_fingerprint == string.lower(fingerprint) then
                matched_cert = true
                break
              end
            end
            f:close()
          end
          ngx.log(ngx.ERR, "Debug: Client Cert Fingerprint matched: ", matched_cert)
          if client_verify == "SUCCESS" and matched_cert then
            return 1
          else
            return 0
          end
        }
        if ($ip_valid = 1) {
          set $allow_access 1;
        }
        if ($client_cert_valid = 1) {
          set $allow_access 1;
        }
        if ($allow_access = 0) {
          return 403; # Deny access
        }
    hosts:
      - host: 51.138.4.141.nip.io
        paths:
          - path: /
            pathType: ImplementationSpecific

There is a crucial change in the annotation nginx.ingress.kubernetes.io/auth-tls-verify-client: it must be set to "optional", which performs optional client certificate validation against the CAs from auth-tls-secret.

The request fails with status code 400 (Bad Request) when a certificate is provided that is not signed by the CA. When no or an otherwise invalid certificate is provided, the request does not fail, but instead the verification result is sent to the upstream service.
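One detail of the snippet is worth checking before reuse: it turns the whitelist file into a regular expression and matches it against the client address. The Python sketch below models that approach under two assumptions of ours: that every non-alphanumeric character is escaped, and that the match is an unanchored search (our understanding of the default behaviour of ngx.re.match). The function names are hypothetical.

```python
import re


def build_whitelist_regex(lines) -> str:
    """Mirror the Lua loop: trim each line, escape non-alphanumerics, join with '|'."""
    whitelist = ""
    for line in lines:
        escaped = re.sub(r"([^0-9A-Za-z])", r"\\\1", line.strip())
        whitelist = escaped if whitelist == "" else whitelist + "|" + escaped
    return whitelist


def ip_matches(client_ip: str, lines) -> bool:
    """Unanchored regex search of the client address against the whitelist."""
    return re.search(build_whitelist_regex(lines), client_ip) is not None


# An entry with a /32 suffix is escaped literally, so a bare address does not match it.
print(ip_matches("20.71.218.174", ["20.71.218.174/32"]))
# A bare entry matches, but so does any address that merely contains it.
print(ip_matches("20.71.218.174", ["20.71.218.174"]))
print(ip_matches("120.71.218.174", ["20.71.218.174"]))
```

If the model holds, whitelist entries should be bare addresses rather than CIDR ranges, and anchoring the expression (for example wrapping it as ^(...)$) or switching to real CIDR matching would avoid accidental substring matches. A blank line in the file would also produce an empty alternative that matches every address.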

You may have noticed that the script reads its configuration from files under /etc/nginx/configmaps, such as whitelist-ips.

The ConfigMap content:

apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-whitelist-config
data:
  whitelist-ips: |
    20.71.218.174/32
  allowed-cert-fingerprints: |
    A6A61274AECF274B69328455F0DAF0D9143209F4

We should also mount it in the Ingress Nginx deployment:

ingress-nginx:
  controller:
    service:
      enabled: true
      type: LoadBalancer
      externalTrafficPolicy: Local
    replicaCount: 1
    allowSnippetAnnotations: true
    extraVolumes:
      - name: whitelist-config
        configMap:
          name: nginx-whitelist-config
    extraVolumeMounts:
      - name: whitelist-config
        mountPath: /etc/nginx/configmaps
        readOnly: true

It is time to update both apps and run a test:

poc: helm upgrade --kubeconfig=kubeconfig --install --namespace ingress-nginx --create-namespace ingress ./ingress-nginx

Release "ingress" has been upgraded. Happy Helming!

NAME: ingress

LAST DEPLOYED: Thu Jan 9 12:46:44 2025

NAMESPACE: ingress-nginx

STATUS: deployed

REVISION: 5

Final testing time:

poc: ssh -i vm1.rsa pulumiuser@$(pulumi stack output vm1-ip --cwd infrastructure)

Linux testvm1 5.10.0-33-cloud-amd64 #1 SMP Debian 5.10.226-1 (2024-10-03) x86_64

pulumiuser@testvm1:~$ curl -k https://51.138.4.141.nip.io

{

"hostname": "ingress-podinfo-59788c475f-bwdmj",

"version": "6.7.1",

"revision": "6b7aab8a10d6ee8b895b0a5048f4ab0966ed29ff",

"color": "#34577c",

"logo": "https://raw.githubusercontent.com/stefanprodan/podinfo/gh-pages/cuddle_clap.gif",

"message": "It works as expected!",

"goos": "linux",

"goarch": "amd64",

"runtime": "go1.23.2",

"num_goroutine": "8",

"num_cpu": "2"

}

poc: ssh -i vm2.rsa pulumiuser@$(pulumi stack output vm2-ip --cwd infrastructure)

Linux testvm2 5.10.0-33-cloud-amd64 #1 SMP Debian 5.10.226-1 (2024-10-03) x86_64

pulumiuser@testvm2:~$ curl -k https://51.138.4.141.nip.io

<html>

<head><title>403 Forbidden</title></head>

<body>

<center><h1>403 Forbidden</h1></center>

<hr><center>nginx</center>

</body>

</html>

poc: scp -i vm2.rsa {client.crt,client.key,ca.crt} pulumiuser@$(pulumi stack output vm2-ip --cwd infrastructure):/tmp/

client.crt 100% 1655 56.1KB/s 00:00

client.key 100% 1704 57.3KB/s 00:00

ca.crt

poc: ssh -i vm2.rsa pulumiuser@$(pulumi stack output vm2-ip --cwd infrastructure)

Linux testvm2 5.10.0-33-cloud-amd64 #1 SMP Debian 5.10.226-1 (2024-10-03) x86_64

pulumiuser@testvm2:~$ curl -k --cert /tmp/client.crt --key /tmp/client.key --cacert /tmp/ca.crt https://51.138.4.141.nip.io

{

"hostname": "ingress-podinfo-59788c475f-bwdmj",

"version": "6.7.1",

"revision": "6b7aab8a10d6ee8b895b0a5048f4ab0966ed29ff",

"color": "#34577c",

"logo": "https://raw.githubusercontent.com/stefanprodan/podinfo/gh-pages/cuddle_clap.gif",

"message": "It works as expected!",

"goos": "linux",

"goarch": "amd64",

"runtime": "go1.23.2",

"num_goroutine": "8",

"num_cpu": "2"

}

Explanation:

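The $ip_valid variable is set to 1 when the client address matches an entry from the whitelist file (20.71.218.174/32 in our ConfigMap) and to 0 otherwise.

The $client_cert_valid variable is set to 1 when the client certificate is verified successfully ($ssl_client_verify = SUCCESS) and its fingerprint is on the allowed list.

Phase 6: Testing and Validation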

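After implementing the Lua script, the following tests, shown in the final testing output above, were conducted:

- a request from VM1 (whitelisted IP) without a client certificate, which succeeded

- a request from VM2 (IP not whitelisted) without a client certificate, which returned 403 Forbidden

- a request from VM2 with the client certificate and key, which succeeded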

This approach successfully combined IP whitelisting and mTLS authentication, offering secure access to services for both internal users and remote workers.
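The decision the snippet makes can be summarized as a small truth table. The Python sketch below mirrors its final checks under our reading of the configuration; the 200 status stands in for whatever the backend returns, and the function names are illustrative.

```python
def allow_access(ip_valid: bool, client_verify: str, fingerprint_listed: bool) -> bool:
    """Mirror the final checks: a whitelisted IP OR a verified, listed certificate."""
    client_cert_valid = client_verify == "SUCCESS" and fingerprint_listed
    return ip_valid or client_cert_valid


def status_code(ip_valid: bool, client_verify: str, fingerprint_listed: bool) -> int:
    """Map the decision to the HTTP status a client would observe."""
    return 200 if allow_access(ip_valid, client_verify, fingerprint_listed) else 403


# "NONE" is the verification status Nginx reports when no certificate was presented.
scenarios = [
    ("VM1, whitelisted IP, no certificate", True, "NONE", False),
    ("VM2, other IP, no certificate", False, "NONE", False),
    ("VM2, other IP, listed client certificate", False, "SUCCESS", True),
    ("other IP, verified but unlisted certificate", False, "SUCCESS", False),
]
for name, ip_valid, verify, listed in scenarios:
    print(f"{name}: {status_code(ip_valid, verify, listed)}")
```

The last scenario is the offboarding case: a certificate signed by the CA is still rejected once its fingerprint has been removed from the allowed list.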

Cleanup

poc: pulumi destroy --cwd infrastructure

Previewing destroy (poc):

Type Name Plan

- pulumi:pulumi:Stack ingress-nginx-public-restrictions-poc delete

- ├─ azure-native:network:NetworkInterface testvm2-security-group delete

- ├─ random:index:RandomString testvm2-domain-label delete

- ├─ azure:containerservice:KubernetesCluster testAKS delete

- ├─ azure-native:network:NetworkSecurityGroup testvm1-security-group delete

- ├─ tls:index:PrivateKey testvm2-ssh-key delete

- ├─ azure-native:compute:VirtualMachine testvm2 delete

- ├─ azure-native:compute:VirtualMachine testvm1 delete

- ├─ azure-native:network:PublicIPAddress testvm2-public-ip delete

- ├─ azure-native:network:VirtualNetwork testvm2-network delete

- ├─ azure-native:network:NetworkSecurityGroup testvm2-security-group delete

- ├─ random:index:RandomString testvm1-domain-label delete

- ├─ azure-native:network:NetworkInterface testvm1-security-group delete

- ├─ azure-native:network:PublicIPAddress testvm1-public-ip delete

- ├─ azure-native:network:VirtualNetwork testvm1-network delete

- ├─ azure:core:ResourceGroup test delete

- └─ tls:index:PrivateKey testvm1-ssh-key delete

Outputs:

- kubeConfig : [secret]

- vm1-ip : "20.71.218.174"

- vm1-privatekey: [secret]

- vm2-ip : "20.71.218.175"

- vm2-privatekey: [secret]

Resources:

- 17 to delete

Do you want to perform this destroy? yes

Destroying (poc):

Type Name Status

- pulumi:pulumi:Stack ingress-nginx-public-restrictions-poc deleted (0.06s)

- ├─ azure-native:compute:VirtualMachine testvm2 deleted (44s)

- ├─ azure-native:compute:VirtualMachine testvm1 deleted (44s)

- ├─ azure-native:network:NetworkInterface testvm2-security-group deleted (5s)

- ├─ azure-native:network:NetworkInterface testvm1-security-group deleted (4s)

- ├─ azure-native:network:PublicIPAddress testvm1-public-ip deleted (11s)

- ├─ azure:containerservice:KubernetesCluster testAKS deleted (222s)

- ├─ azure-native:network:NetworkSecurityGroup testvm1-security-group deleted (1s)

- ├─ azure-native:network:PublicIPAddress testvm2-public-ip deleted (10s)

- ├─ azure-native:network:VirtualNetwork testvm1-network deleted (11s)

- ├─ azure-native:network:VirtualNetwork testvm2-network deleted (11s)

- ├─ azure-native:network:NetworkSecurityGroup testvm2-security-group deleted (2s)

- ├─ tls:index:PrivateKey testvm2-ssh-key deleted (0.02s)

- ├─ tls:index:PrivateKey testvm1-ssh-key deleted (0.07s)

- ├─ azure:core:ResourceGroup test deleted (49s)

- ├─ random:index:RandomString testvm2-domain-label deleted (0.04s)

- └─ random:index:RandomString testvm1-domain-label deleted (0.09s)

Outputs:

- kubeConfig : [secret]

- vm1-ip : "20.71.218.174"

- vm1-privatekey: [secret]

- vm2-ip : "20.71.218.175"

- vm2-privatekey: [secret]

Resources:

- 17 deleted

Duration: 5m23s

By implementing the described architecture and tools, configuring and deploying the mTLS solution has been significantly streamlined, saving hours of manual work. In practice, deploying this solution involves running scripts to automate certificate generation, configuring the Ingress with a Helm chart, and seamlessly integrating it with the existing infrastructure quickly and repeatedly.

For example, an administrator can run a script to generate the necessary certificates and then update the Kubernetes Ingress configuration with a single Helm command, enabling full-service security for both on-site and remote employees in just a few minutes.

The repository with configuration can be found here: https://github.com/hicsh/kubernetes-nginx-public-hybrid-access

Managing access in hybrid cloud environments presents unique challenges, particularly given the growing need for secure and flexible solutions. This article explored how Kubernetes Ingress addresses these challenges by streamlining traffic management while integrating advanced security protocols like mutual TLS (mTLS). From IP whitelisting to certificate-based authentication, we demonstrated practical techniques to optimize both accessibility and security for public and hybrid cloud setups.

By leveraging Kubernetes Ingress in your DevOps practices, you can significantly boost scalability, ensure robust security, and simplify workflows—all essential factors for efficient cloud management. Implementing mTLS adds a crucial layer of trust, offering authentication solutions that adapt seamlessly to remote work scenarios without compromising security or productivity.

We encourage you to explore these strategies further and consider how they could enhance your organization’s cloud infrastructure. Check out our DevOps platform. For additional resources or guidance, contact us to tailor these solutions to your specific needs. The potential for improved efficiency and security awaits—start transforming your DevOps processes today.